Xinyuan Wang

Hi! I am Xinyuan Wang (王心远).

I am a Ph.D. student at XLANG Lab, the University of Hong Kong, supervised by Prof. Tao Yu.

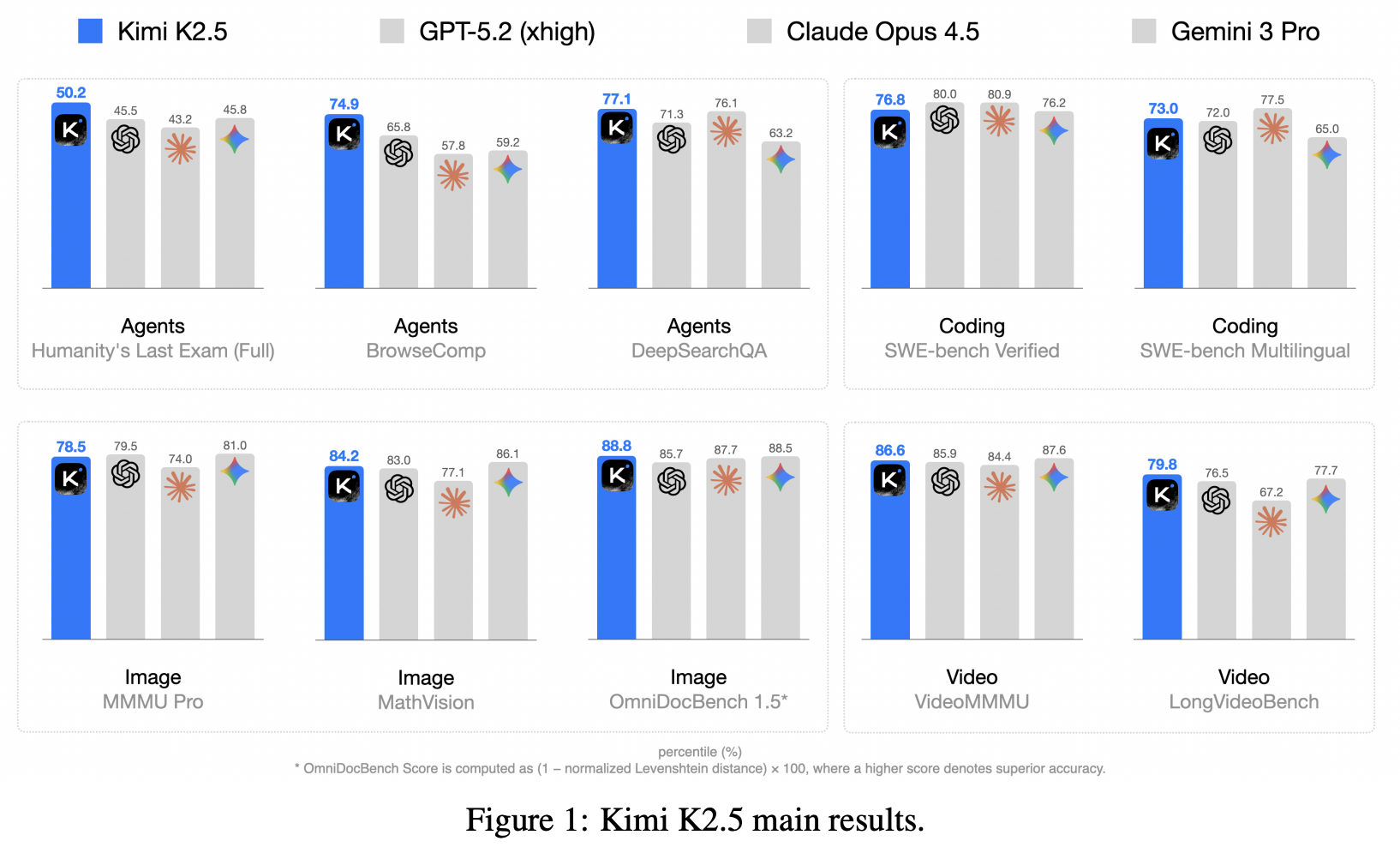

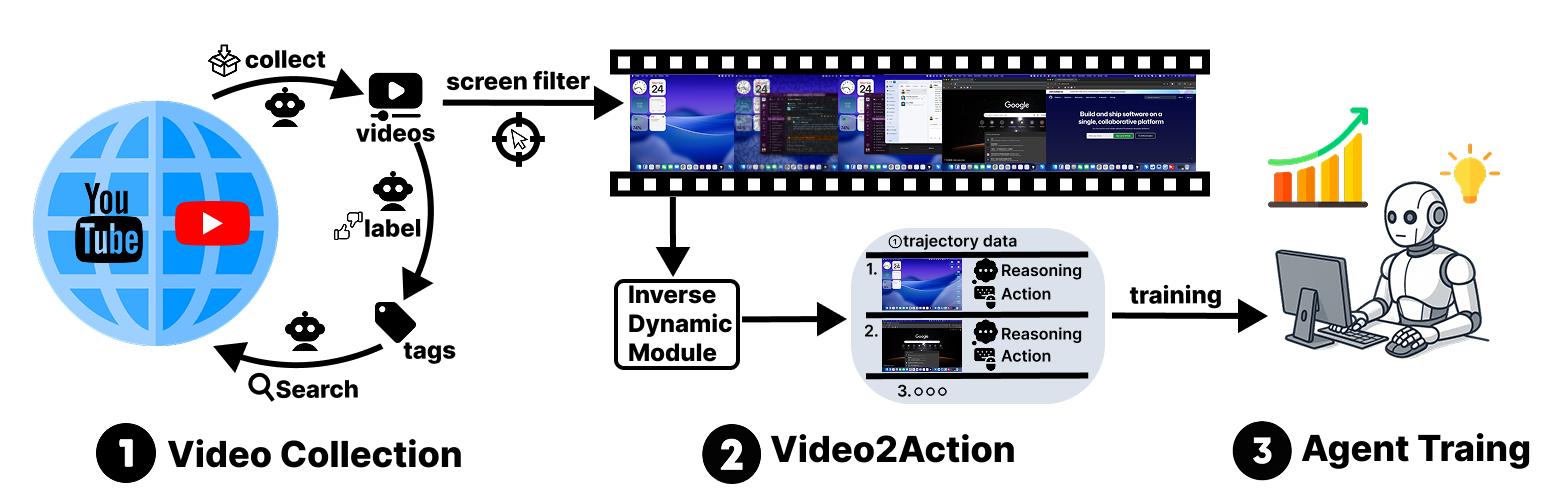

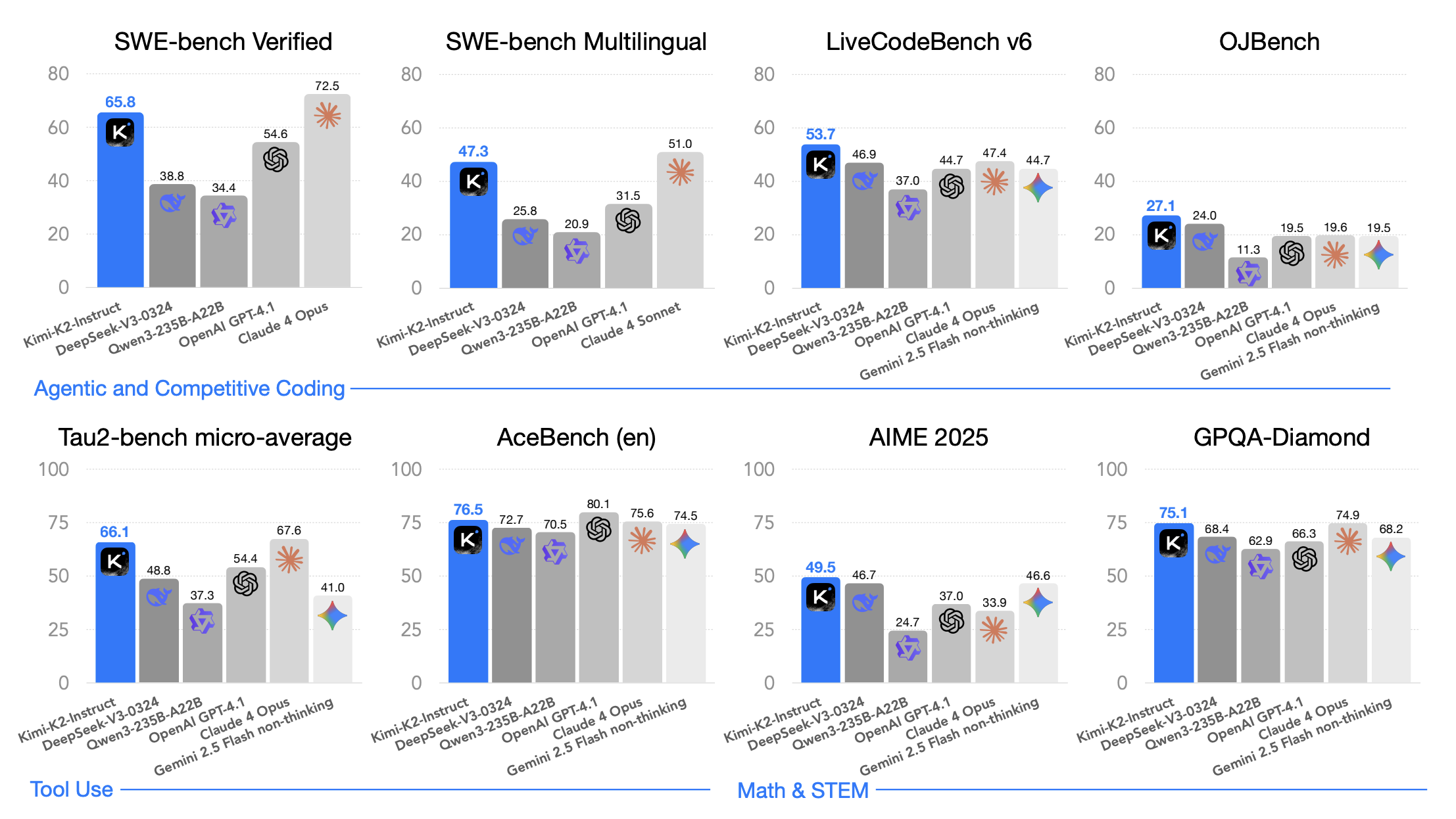

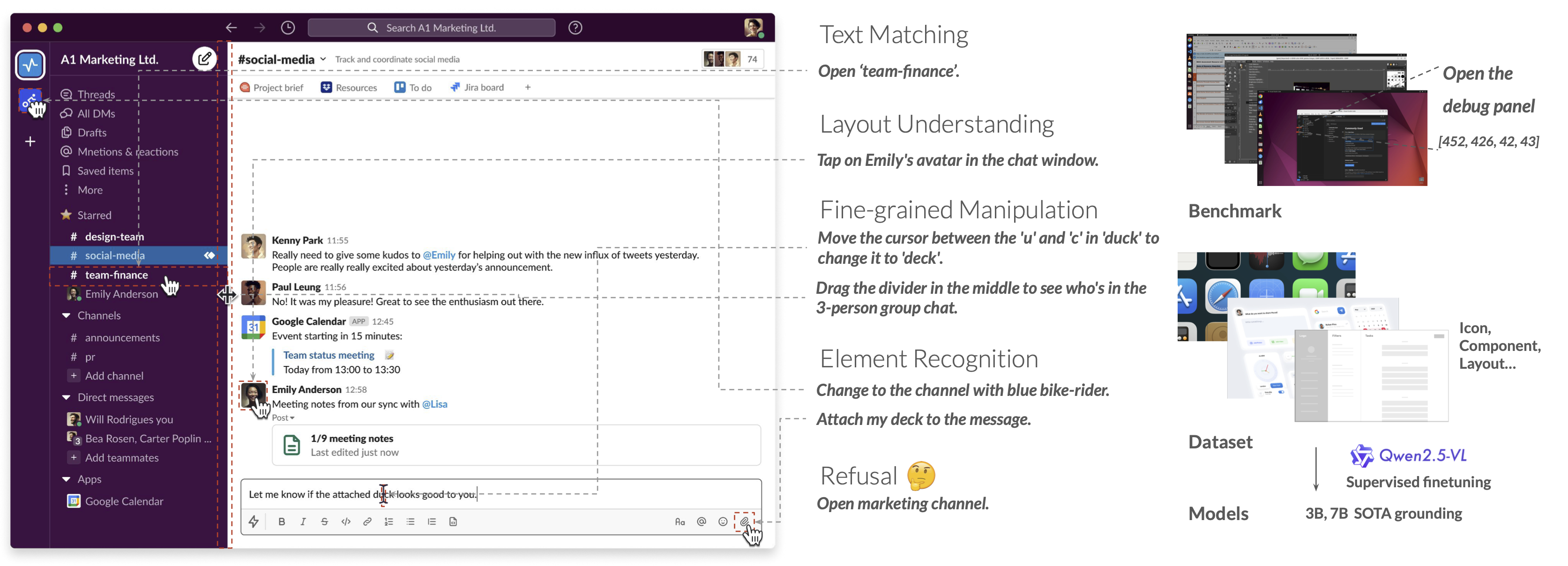

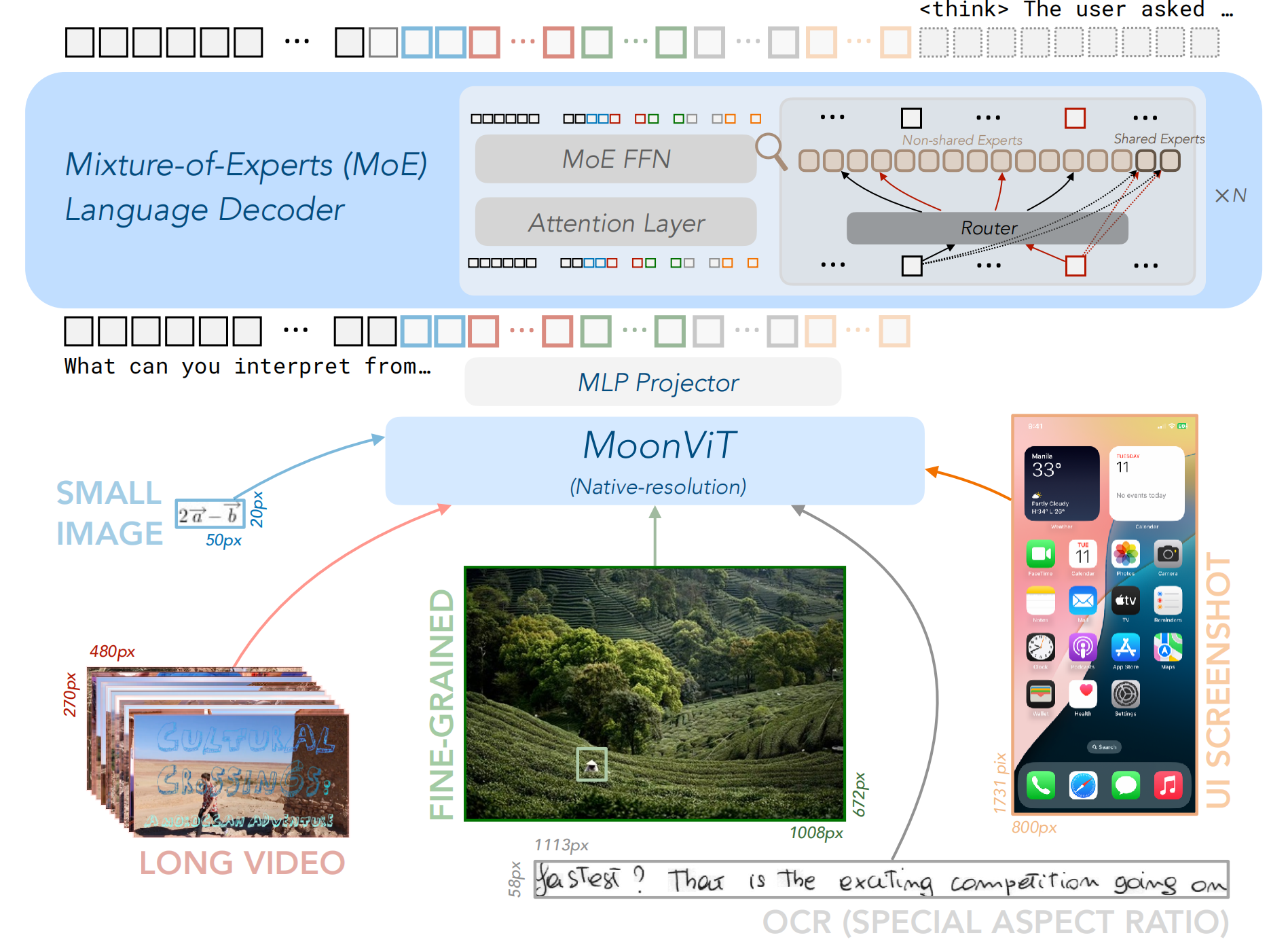

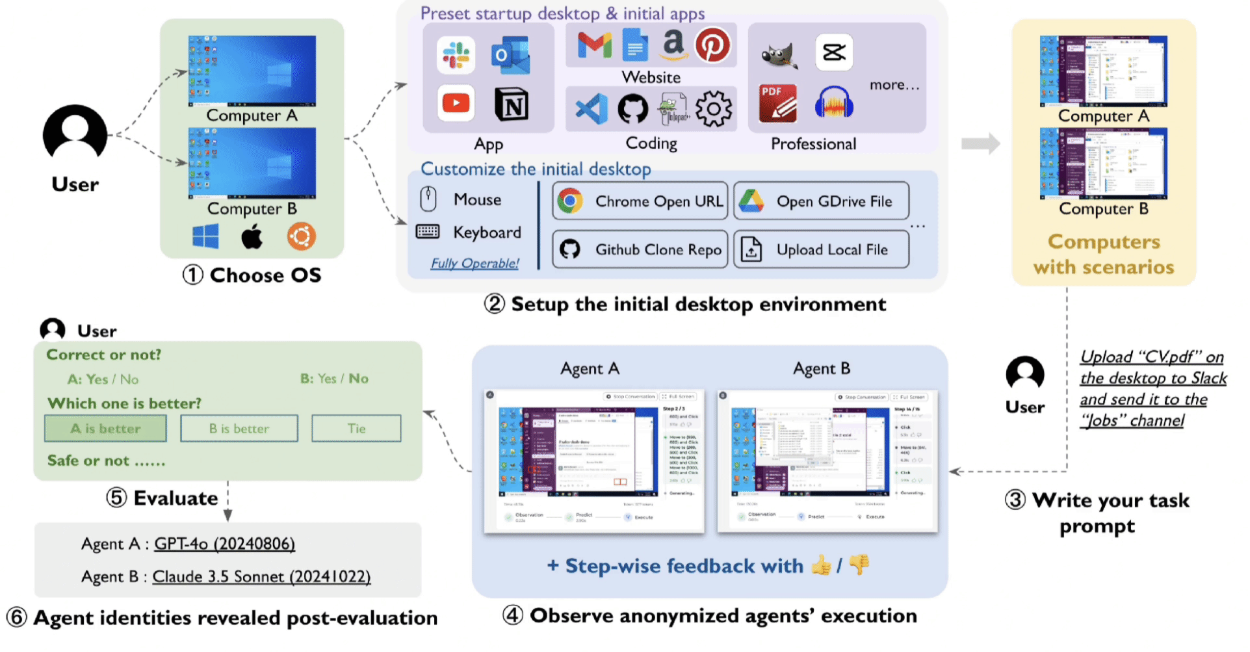

I currently work on agentic foundation models, especially computer-use agent models (Kimi K2.5, OpenCUA, Kimi K2, and Kimi-VL), agent evaluation (Computer Agent Arena, OSWorld-Verified), and agent data synthesis (Jedi, VideoAgentTrek). At UCSD, I worked on automatic LLM prompt optimization (PromptAgent) and LLM reasoning (LLM Reasoners). I also worked in Prof. Zhuowen Tu’s group, exploring how to improve diffusion models’ conceptual performance.

News

| Date | News |
|---|---|
| Jan 31, 2026 | Kimi K2.5 is released! Ranked #1 on the OSWorld leaderboard: the strongest open agentic model. Happy to be part of the team! |
| Jan 26, 2026 | Computer Agent Arena and VideoAgentTrek are accepted to ICLR 2026! |
| Oct 11, 2025 | 🎉 OpenCUA received the Best Paper Award at the COLM AIA Workshop! |
| Sep 19, 2025 | OpenCUA and Jedi are accepted to NeurIPS as Spotlight papers! |
| Sep 19, 2025 | OpenCUA is accepted to the COLM 2025 AIA Workshop as an oral paper! |

Selected Publications

- Computer Agent Arena: Compare & Test Computer Use Agents on Crowdsourced Real-World Tasks (Jul 2025)