Xinyuan Wang

Hi! I am Xinyuan Wang (王心远).

I am a Ph.D. student at HKU, mentored by Prof. Tao Yu. I obtained my master’s degree from the University of California, San Diego (UCSD), where I was lucky to be mentored by two distinguished professors in Natural Language Processing and Computer Vision: Prof. Zhiting Hu and Prof. Zhuowen Tu. Prior to my studies at UCSD, I graduated from Central South University (CSU) in Hunan, China, where I was mentored by Prof. Ying Zhao.

Research Interests

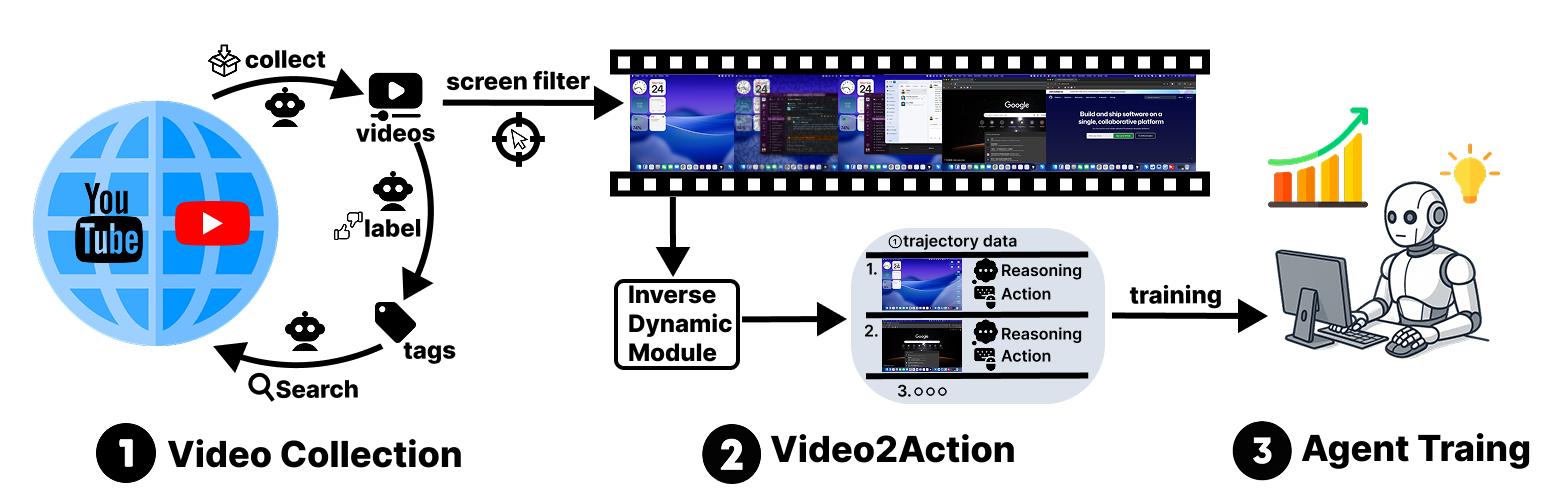

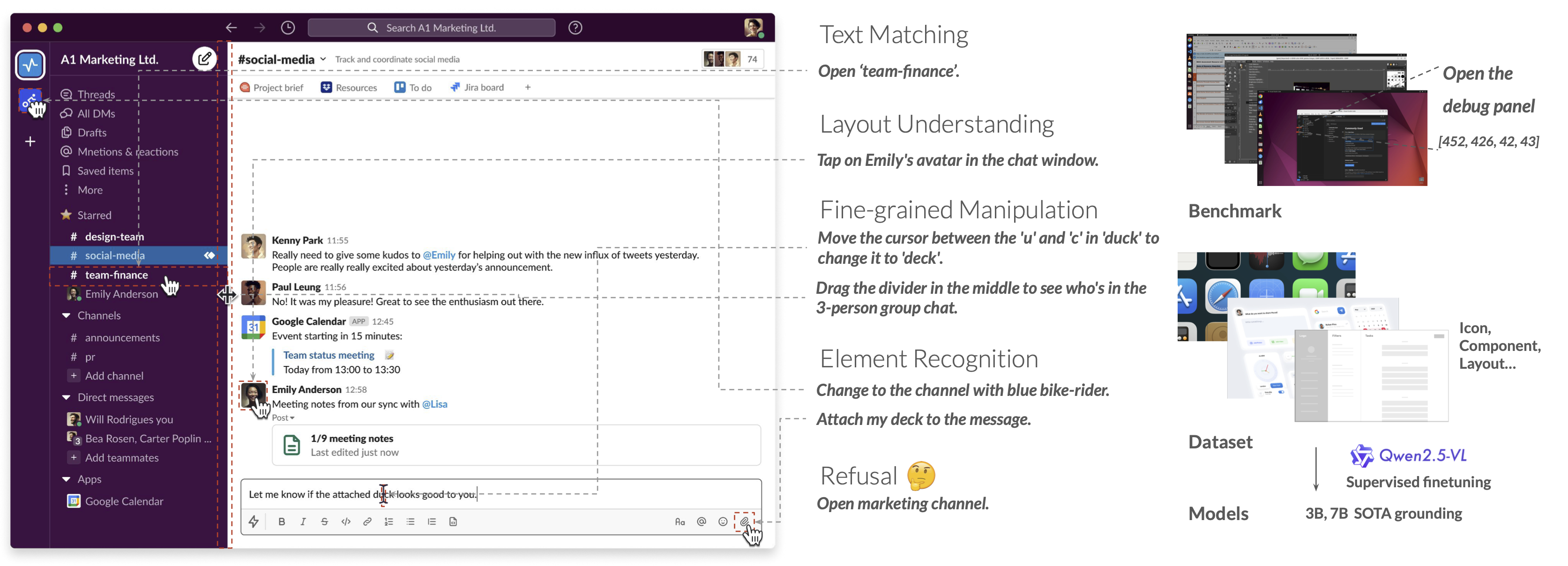

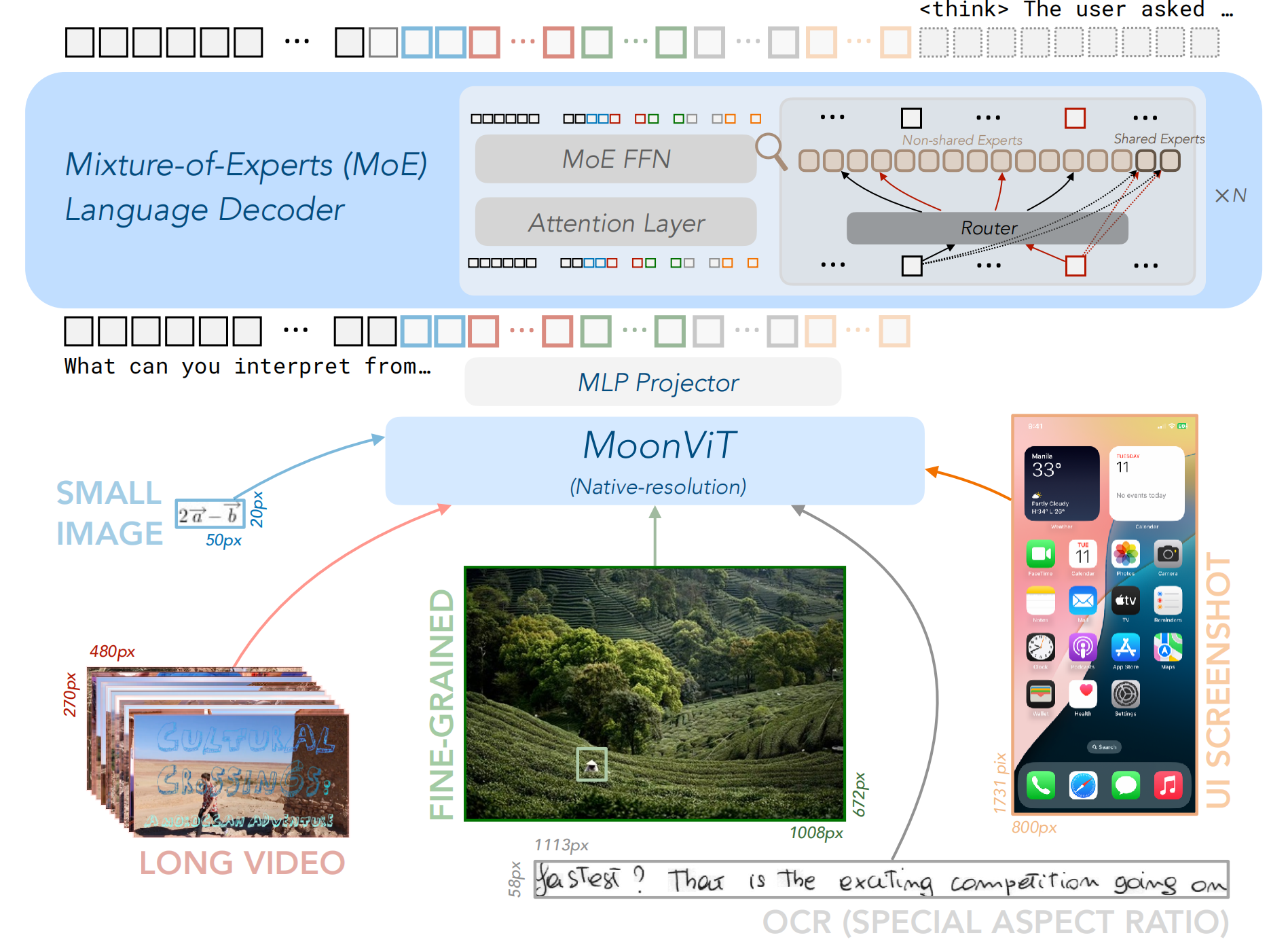

- Agent Foundation Model: Designing and developing LLM/VLM-based agent foundation models capable of interpreting and executing actions across real-world, digital, and simulated environments (OpenCUA, Kimi-VL).

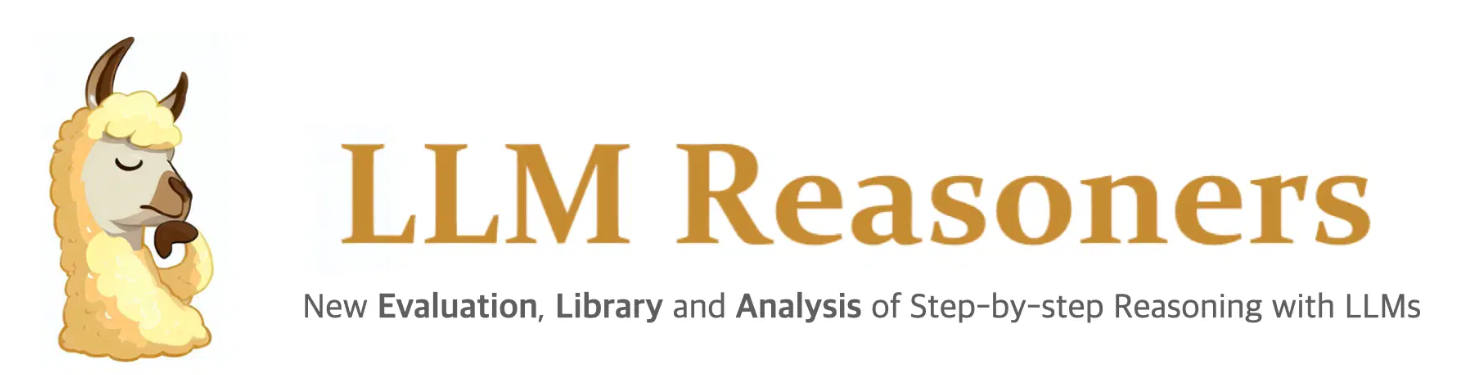

- Language Model Reasoning: Improving the planning, reasoning, and decision-making capabilities of VLMs/LLMs (LLM Reasoners).

- Foundation Model Prompting: Employing interpretable prompting to bridge the domain gap between user objectives and the outputs of foundation models, and boosting their performance on complex tasks through efficient prompting (PromptAgent).

Research Overview

I am now working on agentic foundation models, especially computer-use agent models, including OpenCUA and Kimi-VL. At UCSD, I worked on automatic LLM prompt optimization (PromptAgent: Strategic Planning with Language Models Enables Expert-level Prompt Optimization) and LLM reasoning (LLM Reasoners). I also worked in Prof. Zhuowen Tu’s group, exploring how to improve diffusion models’ conceptual performance with an end-to-end loss. During my undergraduate years, I was mentored by Prof. Ying Zhao and worked on the interpretation and visualization of convolutional neural networks. Here is my graduate thesis: The Research on The Interpretability Method of Deep Neural Network Based on Average Image.

News

| Date | News |
|---|---|
| Oct 11, 2025 | 🎉 OpenCUA received the Best Paper Award at the COLM AIA Workshop! |
| Sep 19, 2025 | OpenCUA and Jedi are accepted to NeurIPS as Spotlight papers! |
| Sep 19, 2025 | OpenCUA is accepted to the COLM 2025 AIA Workshop as an Oral paper! |
| Aug 13, 2025 | OpenCUA: Open Foundations for Computer-Use Agents is published on arXiv! It is the first open-source foundation for computer-use agents, including infrastructure, dataset, training recipe, model, and benchmark. |
| May 19, 2025 | Scaling Computer-Use Grounding via User Interface Decomposition and Synthesis is published on arXiv! We introduce OSWorld-G, a large-scale grounding benchmark, and Jedi, a strong grounding model. |

Selected Publications


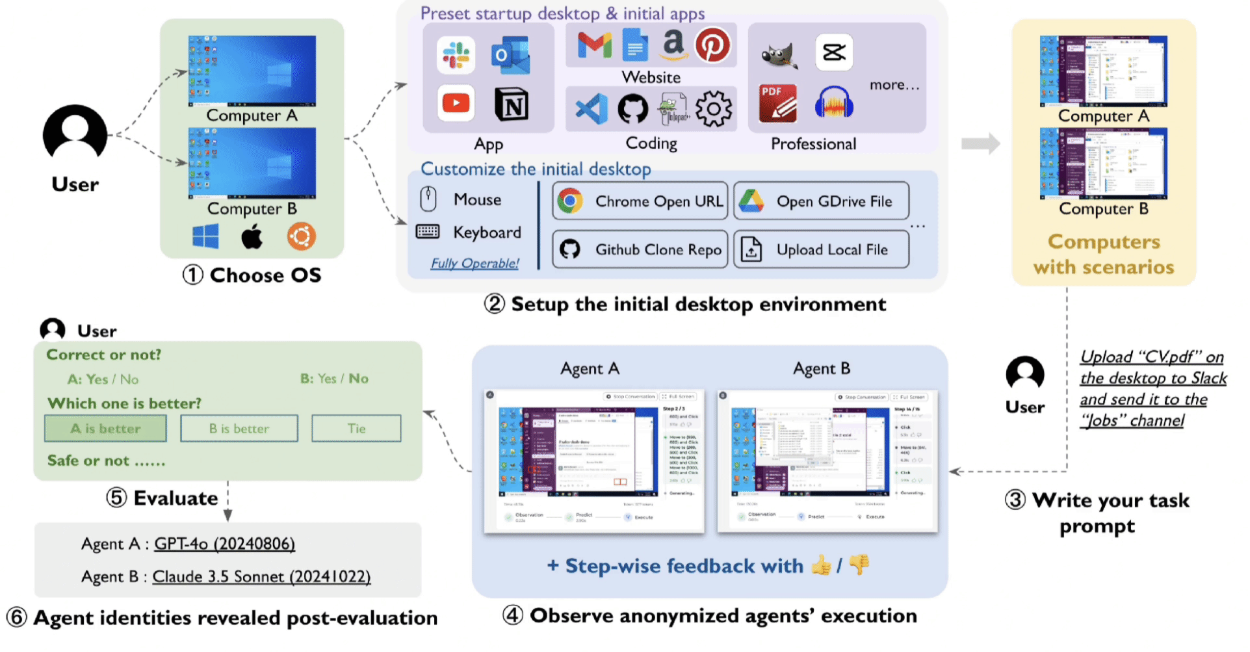

Computer Agent Arena: Compare & Test Computer Use Agents on Crowdsourced Real-World Tasks, Jul 2025